For most of my .Net solutions, I define the database in a database project (SQL Server Data Tools or SSDT) in the solution and use that to deploy to database server(s). For additional QA, using Azure Pipelines, I often spin up a localdb instance and deploy the database to it, after which I can run some (unit) tests on it. One of those tests checks the database for Invalid objects. Recently I caught the pipeline reporting Invalid objects (and thus build failures), while the database was actually working absolutely fine. What was going on here?

A bit of background

A .sqlproj holds all tables, views, functions and stored procedures that make up my project’s database (or databases). SSDT does a reasonable job compiling and verifying the database model, but has its shortcomings. Details are topic for another future blog post perhaps, but in short: it does not catch all problems and sometimes the project will build fine in Visual Studio, but still fail when deploying to e.g. a local mssql development database because of uncaught errors.

Because every database change is going to be deployed to my dev database during development at some point, so I am pretty likely to catch those errors ahead of releasing the software. But to assist in making sure, I am growing a habit of extending my Azure Pipeline with some extra steps.

First of all, building the database project from the pipeline. That part is quite simple, something along the lines of:

- task: MSBuild@1

inputs:

solution: '**\*.sqlproj'

displayName: 'Build Database project'

Second, try spinning up a sqllocaldb (which are installed by default on windows-latest vms) and deploy to it. That deployment may fail even if the prior build succeeded.

- task: PowerShell@2

inputs:

targetType: 'inline'

script: 'sqllocaldb start mssqllocaldb'

displayName: 'Start mssqllocaldb'

- task: SqlDacpacDeploymentOnMachineGroup@0

inputs:

tasktype: dacpac

dacpacfile: Database\bin\Debug\$(databaseName).dacpac

targetmethod: connectionstring

connectionstring: $(connectionstrings__NameOfConnStrInAppSettingsJson)

displayName: 'Deploy $(databaseName) to localdb'

If by this time we’re still having all green check marks, take it one step further. Have a .NET unit test project that features a DatabaseTests class. This test connects to the database and runs a series of SQL commands to discover objects in the database that are marked Invalid by SQL Server. The query to do that, I borrowed from this excellent DevArt blog post. The test opens a SqlConnection, runs the query using a SqlCommand and checks the results using a SqlReader.

[TestMethod]

public async Task HasNoInvalidObjects()

{

using var command = GetSqlCommand(sqlFindInvalidObjects);

using var reader = await command.ExecuteReaderAsync();

Assert.IsFalse(reader.HasRows, reader.HasRows ? $"There should not have been invalid objects, but found:\n{DumpReader(reader)}" : "OK");

}

The query is supposed to return all invalid objects, so: if it returns an empty result set, we are happy and all is well, until…

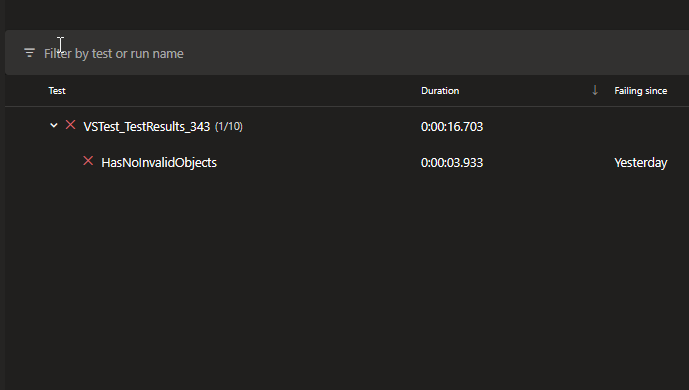

Build failed – There should not have been invalid objects, but found …

Starting test execution, please wait...

A total of 1 test files matched the specified pattern.

Failed HasNoInvalidObjects [3 s]

Error Message: Assert.IsFalse failed. There should not have been invalid objects, but found:

[dbo].[uspAAA] Invalid object name 'uspBBB' P

Indeed, uspAAA internally at some point calls uspBBB using an EXEC uspBBB statement. But why is that considered invalid? There should not be a problem there. The uspBBB stored proc is present and working fine. I verified in the build logs that in the prior deployment task, uspBBB was created properly in the localdb instance, and ahead of uspAAA as well, so it is present when uspAAA is created. (No “… not found but the procedure will still be created”…). Moreover, the SQL script doing the test forces a sp_refreshsqlmodule call first on everything that is marked invalid, and only outputs results if something still fails after the refresh.

Just to be sure, I created a blank database on my dev machine and redeployed from scratch – the unit test succeeds. I ran the query manually from the SQL Management Studio – empty result set.

Argh, why does it fail in the pipeline?

Schema name to the rescue

The cause turns out to be the not specified schema name in the EXEC statement. The first procedure looks roughly like this

CREATE PROCEDURE [dbo].[uspAAA]

AS

BEGIN

-- ...

EXEC uspBBB

-- ...

END

Since both procedures reside in dbo it is not required to specify the schema. It works absolutely fine as long as the user calling it, has dbo as their default schema, which is true for every user in my application. Including the user that runs the unit tests locally on my system.

It looks like this is not true for the LocalDB instance on the windowslatest vm in Azure Pipelines. I have not investigated that further, if anybody knows for sure and can point me to documentation on this, let me know in the comments.

There might be a way to specify the default schema for the user connecting to the localdb from the pipeline task and that may fix things. (Again, feel free to comment if you know.) But, for my case, I have repaired the test by explicitly inserting the schema:

CREATE PROCEDURE [dbo].[uspAAA]

AS

BEGIN

-- ...

EXEC dbo.uspBBB

-- ^^^^ inserted schema prefix

END

in the four procedures that were affected, which was a much quicker fix here (and would have been better from the start anyway).

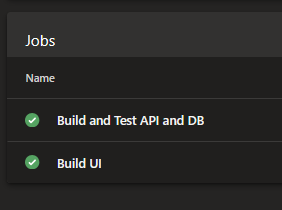

Green light all around now!

Geef een reactie